AI Is Ready. Enterprises Aren't – The Data Gap

Agentic AI is transforming business, but most enterprises aren’t ready—data is the bottleneck. Autonify.ai’s AI agents automate data management, turning complexity into clarity and enabling true AI readiness.

Agentic AI has firmly taken hold of the tech world. But unlike prior hype cycles (e.g., blockchain), I believe AI isn’t just the latest fashion; it’s here to stay. With its ability to reason, act autonomously, and adapt to context, AI is already reshaping business models and competitive landscapes.

Just look at OpenAI reportedly agreeing to acquire Windsurf last week for $3 billion, or Cursor, a team of only 20 people, securing a $9 billion valuation. A decade ago, these ideas would have been laughed out of the room. Today, they’re indicators of a new normal.

And yet, here’s the paradox: while AI is ready, most enterprises aren’t. The biggest obstacle? Their data simply isn’t ready.

Stuck at the Starting Line

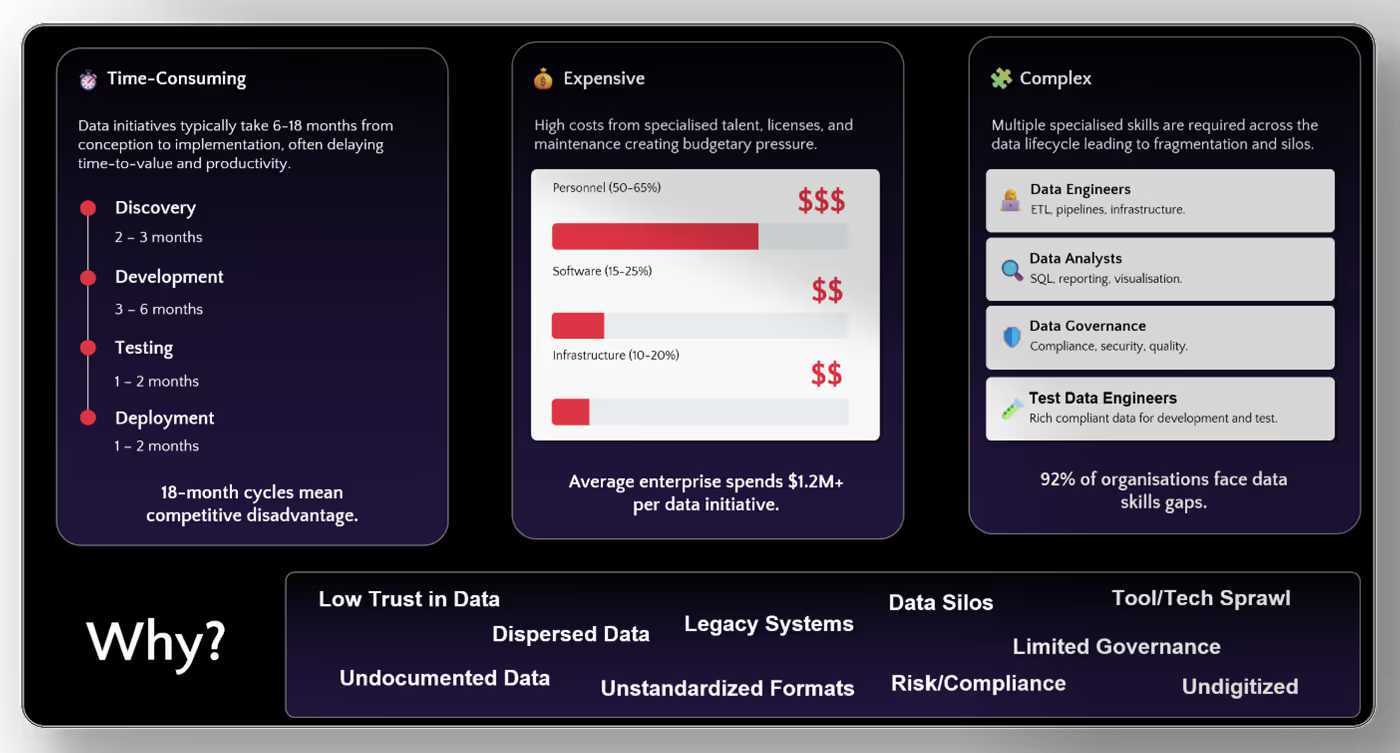

Let’s put it plainly (I keep saying it, but it bears repeating): enterprise data initiatives are not easy, not fast, and certainly not cheap.

We've had countless conversations with executives facing this exact dilemma. The pattern is consistent. Despite huge investments in data transformation programs, many enterprises remain stuck in first gear, unable to leverage AI meaningfully.

Why? Our analysis reveals that before AI can deliver insights, organisations typically spend 12 to 18 months just preparing data. That process includes:

• Discovering what data exists across siloed systems.

• Classifying it for sensitivity and ownership.

• Fixing quality issues (missing, outdated, inconsistent).

• Building pipelines and data warehouses to unify data for insights.

It’s a resource-heavy runway, and increasingly one enterprises can’t afford. In a world where AI-native startups iterate weekly, 12-month delays become strategic liabilities. The one major advantage enterprises still hold is their data, but only if they can unlock its value.

The Enterprise Data Gap

What's holding enterprises back from becoming AI-ready? Often it's not one problem but many compounding ones. I guarantee every enterprise in the world has at least two of the following issues slowing it down.

Low Trust in Data - Poor-quality, outdated, or inconsistent data undermines downstream processes, resulting in incomplete dashboards and broken pipelines that ultimately lead to delayed or flawed data-driven decisions.

Undocumented & Dispersed Data - Data lives in too many places across too many systems—often without clear ownership or documentation.

Legacy Systems & Silos - Outdated architecture traps valuable data in isolated environments.

Technology Sprawl - Overlapping tools and platforms make alignment difficult, especially across departments.

Weak Governance & High Risk - Fear of compliance missteps often leads to paralysis, slowing down innovation.

Analog Workflows - Even in modern organisations, manual or semi-digital processes still create bottlenecks and errors. More often than not, this manifests as Excel spreadsheets (arguably the best and most problematic application ever invented).

Here’s a sobering stat: a typical enterprise data team has just 4–6 people, yet they’re expected to manage and solve these issues across more than 3,000 data sources. That’s at least 500 to 750 sources per person. That’s not just inefficient. It’s an impossible equation.

Turning Challenges into Competitive Advantage

The good news? Every one of these problems is solvable. Through our work at Autonify.ai, we've developed a three-stage framework (discovery, activation, distribution) that helps enterprises move toward agile, outcome-driven approaches.

1. Discovery: Illuminate the Landscape

Start by gaining visibility into your data ecosystem. Implement automated data discovery tools to map where data lives, how it's used, and who owns it. Cataloging data across the organisation lays the foundation for governance, quality, and compliance.

• Build an enterprise-wide data inventory.

• Tag data by sensitivity, ownership, and usage.

• Use metadata to drive context and findability.

2. Activation: Make Data Fit for Purpose

Once data is visible, make it usable. Focus on quality, standardisation, and enhancement. Leverage AI-powered data profiling to identify gaps, enrich information, and ensure consistency across systems.

• Continuously monitor and improve data quality.

• Enable secure, firewalled access with mesh-based data sharing.

For AI development in regulated industries, synthetic data can be a lifesaver, letting teams build and test models quickly without exposing sensitive records.
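The "continuously monitor and improve data quality" step usually starts with a profiling pass that can be scheduled to run repeatedly. The sketch below is one hypothetical version in Python: it counts missing required values and stale records, then flags columns that fall below a completeness threshold. The `profile` and `breaches` functions, the 365-day freshness window, and the 0.9 threshold are illustrative assumptions; it covers missing and outdated data but not cross-system consistency checks.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class QualityReport:
    total: int
    missing: dict[str, int]   # count of empty values per required column
    stale: int                # records older than the freshness window

    def completeness(self, column: str) -> float:
        """Share of records with a non-empty value in `column`."""
        if self.total == 0:
            return 1.0
        return 1 - self.missing.get(column, 0) / self.total


def profile(records: list[dict], required: list[str], date_field: str,
            max_age_days: int, today: date) -> QualityReport:
    """Count missing required values and stale records in a single pass."""
    missing = {col: 0 for col in required}
    stale = 0
    for rec in records:
        for col in required:
            if rec.get(col) in (None, ""):
                missing[col] += 1
        updated = rec.get(date_field)
        # A record with no update date is treated as stale.
        if updated is None or (today - updated).days > max_age_days:
            stale += 1
    return QualityReport(len(records), missing, stale)


def breaches(report: QualityReport, min_completeness: float) -> list[str]:
    """List required columns whose completeness is below the threshold."""
    return [col for col in report.missing
            if report.completeness(col) < min_completeness]


records = [
    {"id": 1, "email": "a@example.com", "country": "AU", "updated": date(2025, 5, 1)},
    {"id": 2, "email": "", "country": "AU", "updated": date(2023, 1, 9)},
    {"id": 3, "email": "c@example.com", "country": None, "updated": date(2025, 4, 20)},
    {"id": 4, "email": "d@example.com", "country": "NZ", "updated": date(2025, 5, 3)},
]
report = profile(records, ["email", "country"], "updated", 365, date(2025, 5, 10))
print(report.stale)           # 1
print(breaches(report, 0.9))  # ['email', 'country']
```

Running the same profile on a schedule and comparing reports over time is what turns a one-off cleanup into continuous monitoring.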

3. Distribution: Deliver the Right Data to Teams, Systems & AI

Data only creates value when it’s accessible, not just to humans but also to AI systems and intelligent data products. That requires governed, self-service access to trusted data.

• Deploy AI-ready data products and APIs.

• Embed governance into distribution workflows to ensure AI-ready data quality.

• Enable secure, role-based access for both teams and AI systems.
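Role-based access for both teams and AI systems can be illustrated by treating an AI agent as just another principal with its own narrowly scoped role. This minimal Python sketch assumes a hypothetical `POLICY` table mapping roles to sensitivity tags, denies access by default, and strips any column a principal isn't entitled to see; the role names, tags, and `serve` helper are made up for illustration rather than drawn from any particular platform.

```python
from dataclasses import dataclass

# Hypothetical policy: role -> sensitivity tags that role may read.
# AI systems get their own explicit role instead of borrowing a human's.
POLICY: dict[str, set[str]] = {
    "analyst": {"public", "internal"},
    "ai_agent": {"public", "internal"},
    "privacy_officer": {"public", "internal", "pii"},
}


@dataclass(frozen=True)
class Principal:
    name: str   # a person or an AI system
    role: str


def can_read(principal: Principal, tag: str) -> bool:
    """Deny by default: an unknown role or tag grants nothing."""
    return tag in POLICY.get(principal.role, set())


def serve(principal: Principal, row: dict, column_tags: dict[str, str]) -> dict:
    """Return only the columns the principal is entitled to see.

    Columns with no tag are treated as "pii", so new fields stay hidden
    until someone classifies them.
    """
    return {col: val for col, val in row.items()
            if can_read(principal, column_tags.get(col, "pii"))}


column_tags = {"customer_id": "internal", "segment": "public", "email": "pii"}
row = {"customer_id": 42, "segment": "enterprise", "email": "x@example.com"}

agent = Principal("churn-model", "ai_agent")
officer = Principal("dana", "privacy_officer")
print(serve(agent, row, column_tags))    # {'customer_id': 42, 'segment': 'enterprise'}
print(serve(officer, row, column_tags))  # {'customer_id': 42, 'segment': 'enterprise', 'email': 'x@example.com'}
```

Defaulting untagged columns to the most restrictive tag is a deliberate choice: a newly added field stays hidden until it has been classified, which ties distribution back to the discovery step.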

When data is discoverable, secure, and structured for AI consumption, teams can efficiently deliver AI data products that create business value from day one.

Introducing Agentic Data Management (ADM)

Based on these insights and the patterns we've observed while building Autonify.ai, we've developed a new approach to bridge the data gap—one built for the speed and scale of AI.

We call it Agentic Data Management (ADM): an emerging model that uses autonomous AI agents to handle the core data lifecycle tasks of discovery, activation, and distribution. These agents don't replace data teams; they amplify them by automating repetitive, complex workflows. It's a leap forward from traditional, resource-heavy data management approaches.

The Autonify.ai Advantage

This is exactly what we’ve built at Autonify.ai—a purpose-built Agentic Data Management platform for the AI-driven enterprise.

Instead of asking your teams to spend months untangling legacy systems, Autonify.ai deploys intelligent agents that handle the heavy lifting:

• Automating discovery through agents that continuously scan, classify, and catalog your data landscape.

• Accelerating activation by intelligently preparing, enriching, and transforming data for AI consumption.

• Streamlining distribution with secure, governed pathways that let both people and AI systems apply intelligence to data, ultimately creating business value.

I’ve spent the last decade watching enterprises spend millions on data programs that never quite cross the finish line. With Autonify, we're compressing timelines from months to minutes—so your data becomes a strategic asset, not a resource drain.